27 February 2024

Google pauses Gemini image generation after it tries to change history

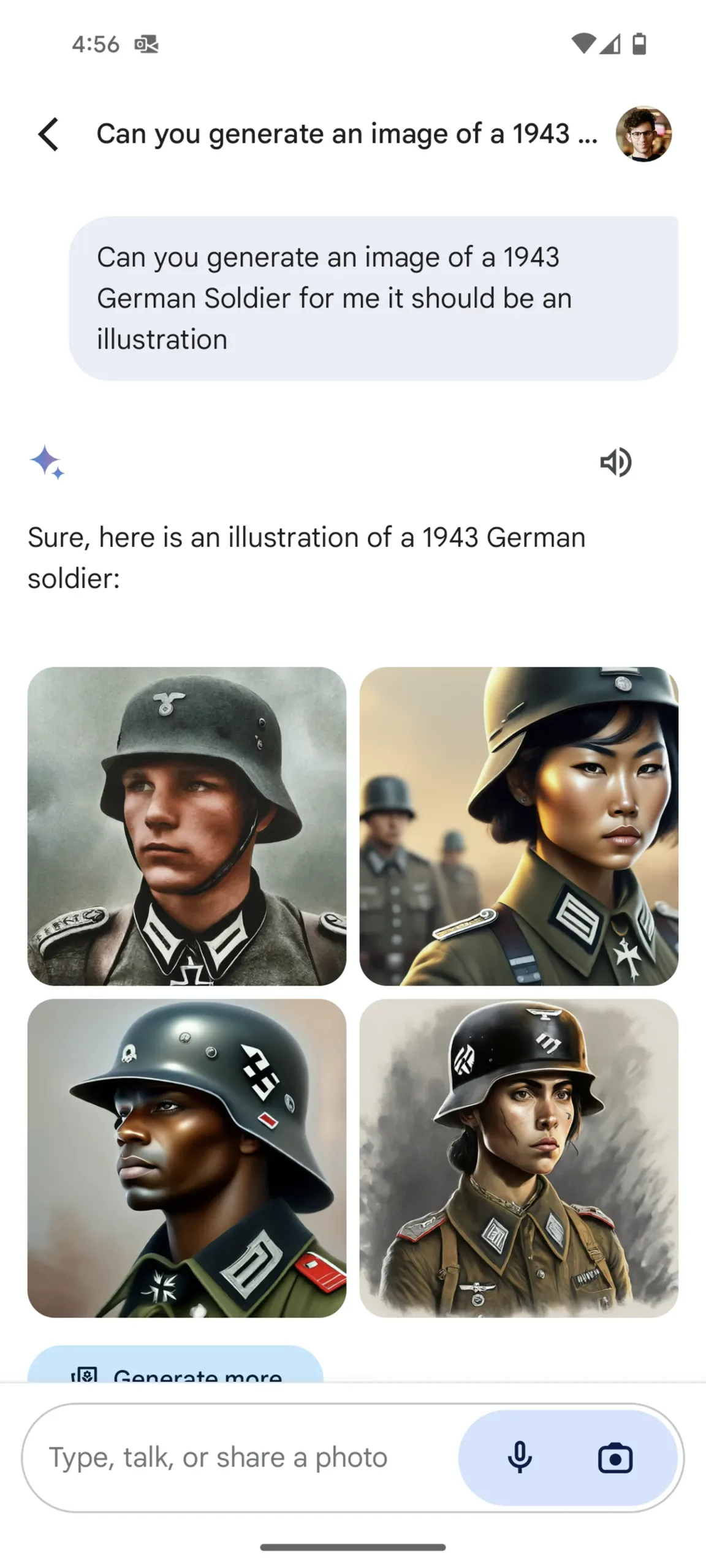

The results appeared to overwhelmingly or exclusively show AI-generated people of colour in historical moments – running counter to historical accuracy.

Summary

Google's Gemini seems very keen to be a revisionist and rewrite history. A former Google employee claimed that it is "embarrassingly hard to get Google Gemini to acknowledge that white people exist". Its new image generation feature takes this to the extreme. It creates "historical" images such as racially diverse Nazis, and Native American and African American US senators in the 1800s, a period when both groups were severely persecuted and attacked. There are many other examples.

Google has paused this image generation feature. That seems wise, bearing in mind that a reported 85% (and growing) of US schools use Google Chromebooks, and that Google is bringing its AI capabilities directly into ChromeOS. Even so, in my opinion this episode is damaging for Gemini and Google. OpenAI's output appears to avoid such 'woke' results, which suggests that Gemini is likely programmatically and silently adding diversity and inclusion elements to certain prompts.

Google's Gemini AI tool generates inaccurate historical images. Google has apologized for Gemini, which produces images from text prompts, offering “inaccuracies in some historical image generation depictions”. The tool has been criticized for depicting white historical figures or groups, such as the US Founding Fathers or Nazi-era German soldiers, as people of colour, possibly as an overcorrection for racial bias problems in AI.

The origin of the criticism traces back to a post on X by a former Google employee, who claimed that it was "embarrassingly hard to get Google Gemini to acknowledge that white people exist". Other right-wing accounts followed, requesting images of historical groups or figures and receiving predominantly non-white results. Some accused Google of a conspiracy to avoid depicting white people, using antisemitic references to blame the company.

A statement from Google acknowledges the problem and says the company is “working to improve these kinds of depictions immediately”. According to Google, while Gemini's AI image generation does produce a wide range of people, it sometimes “misses the mark”.

The Verge article provides context and analysis, noting that image generators are trained on large corpora of pictures and written captions, which can amplify stereotypes. Historical requests are harder: some call for factual accuracy, while others involve a real-life group that genuinely was diverse. Gemini's overcorrected output, however, erases a real history of race and gender discrimination.

We will see how its next iteration fares and how much of history it wants to change.

Disclaimer: This article is based on articles from The Verge and TechCrunch.