A Shift to the Creative Curation Process

We expect students to do more of this in the future as generative AI platforms continue to develop.

We at TELC believe that this article will be relevant to students of the following disciplines (although the list is sure to grow with time):

- Multimedia

- Video Production

- Art History and Art Curation

- Architecture

- Graphic Design

In summary, this is the learning process the student would go through:

- Go to a comprehensive database such as https://www.midlibrary.io/ and study one or more art styles by various world-renowned artists, both historical and current.

- Take one or more images from that database and put them into a platform that can generate a prompt from an image you upload or link to.

- Once the prompt is generated, put it into an AI image generator such as Midjourney, StableDiffusion or DreamStudio.

- Study the results by comparing the original uploaded or linked image with the images you generated.

- Generate further variations and continue to study the results in detail.


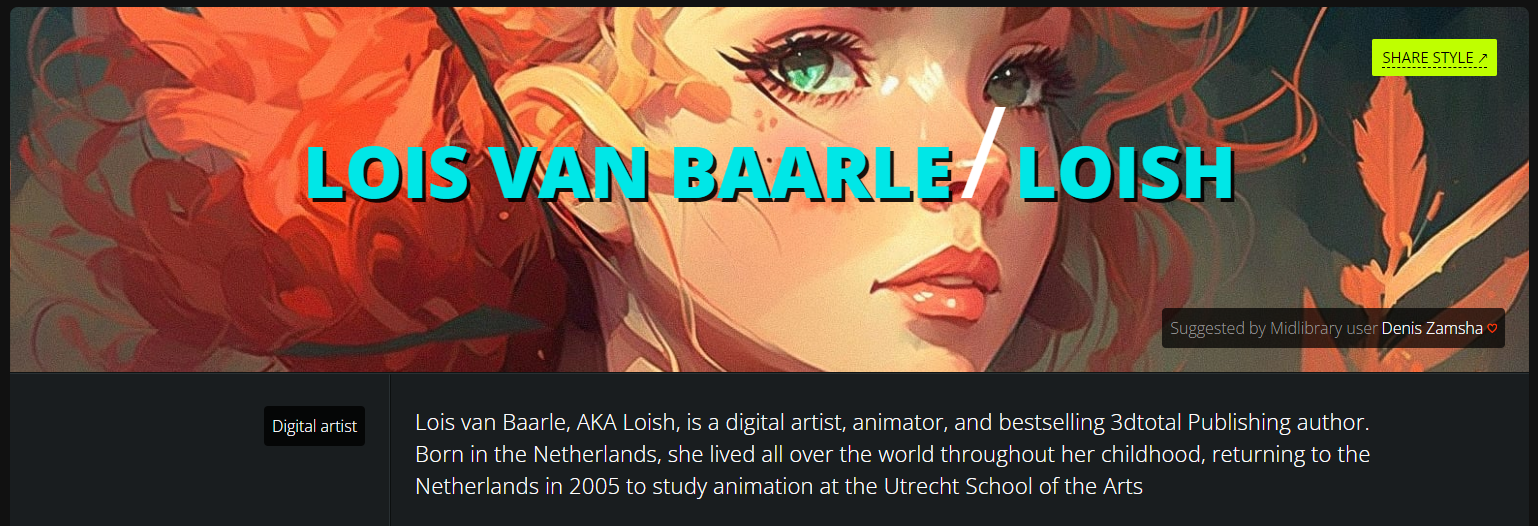

Step 1: Finding the source of inspiration

In my case, I went to https://www.midlibrary.io/styles/lois-van-baarle and studied the work of Van Baarle, an illustrator I like. I particularly like the stylised realism she adopts in her work.

I decided to go with this image (which was generated by an AI and put on that platform):

I took that image and tried two methods:

1- Crop out one of the designs and put it into an image prompt generator (such as this one: https://huggingface.co/spaces/fffiloni/CLIP-Interrogator-2)

2- Use the whole four-block image as it is.

Both yielded extremely similar results.

As you can see, it generated a pretty accurate prompt and even recognised the style of the artist. The only mistake I found is that it inserted "trending on artstation" as part of the prompt, which was kinda weird.
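If you want to tidy a generated prompt before passing it to an image generator, a small script can drop filler parts such as "trending on artstation". The following is only a minimal sketch: the function name `clean_prompt`, the `FILLER_TAGS` list and the example prompt are all made up for illustration and are not the actual output of the prompt generator used above.

```python
from typing import Iterable

# Hypothetical list of filler tags; adjust it to whatever your prompts contain.
FILLER_TAGS = ("trending on artstation", "featured on artstation")


def clean_prompt(prompt: str, filler_tags: Iterable[str] = FILLER_TAGS) -> str:
    """Remove comma-separated prompt parts that match a filler tag (case-insensitive)."""
    unwanted = {tag.lower() for tag in filler_tags}
    # Split the prompt on commas and trim the whitespace around each part.
    parts = [part.strip() for part in prompt.split(",")]
    # Keep non-empty parts that are not in the filler list.
    kept = [part for part in parts if part and part.lower() not in unwanted]
    return ", ".join(kept)


if __name__ == "__main__":
    # Made-up example prompt, for illustration only.
    example = "a portrait of a girl, by Lois van Baarle, trending on artstation, stylised realism"
    print(clean_prompt(example))
```

Only exact matches are removed, so a style cue that merely contains a similar word is left untouched. Comparing the images generated with and without the filler part is itself a useful exercise in studying how much each part of a prompt matters.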

I then took the prompt and put it into both Midjourney and StableDiffusion. Here are the results:

Midjourney:

StableDiffusion:

Both tools nailed the style of the artist and the mood, including some of the techniques she uses, although it's clear that Midjourney is currently much more advanced. It also produces higher-resolution images by default, which helps.

So now that I have the original library artworks and the generated ones, I can sit and analyse them. Coupled with research into the artist and the actual methods and techniques she uses to draw, I can learn not only about her art, but about digital drawing in general. I can also begin to understand the deeper workings of how something like Midjourney generates images in a specific style (or handles other things in my prompt).

As such, I will greatly increase my knowledge, and if this is coupled with further iterations and experimentation on my part, who knows how much more I'll learn as a student of, let's say, Art Curation or Multimedia. You can make the same argument for architecture students if the subject were related to that profession instead of digital illustration.

That said, there will always be doomsayers. There are arguments that this will be the death of art, like this Tweet by Chet Bliss:

(By the way, that image-to-text generator in Midjourney currently doesn't seem to work 100% of the time.)

We disagree with what he claims. When the automotive industry began automating many of the processes of making cars, new fields started popping up to support them. We believe this will have a similar effect: it will create new fields.